PacCam: Pacman controlled with your face

Chomp to move, look to turn

Nov 1, 2024

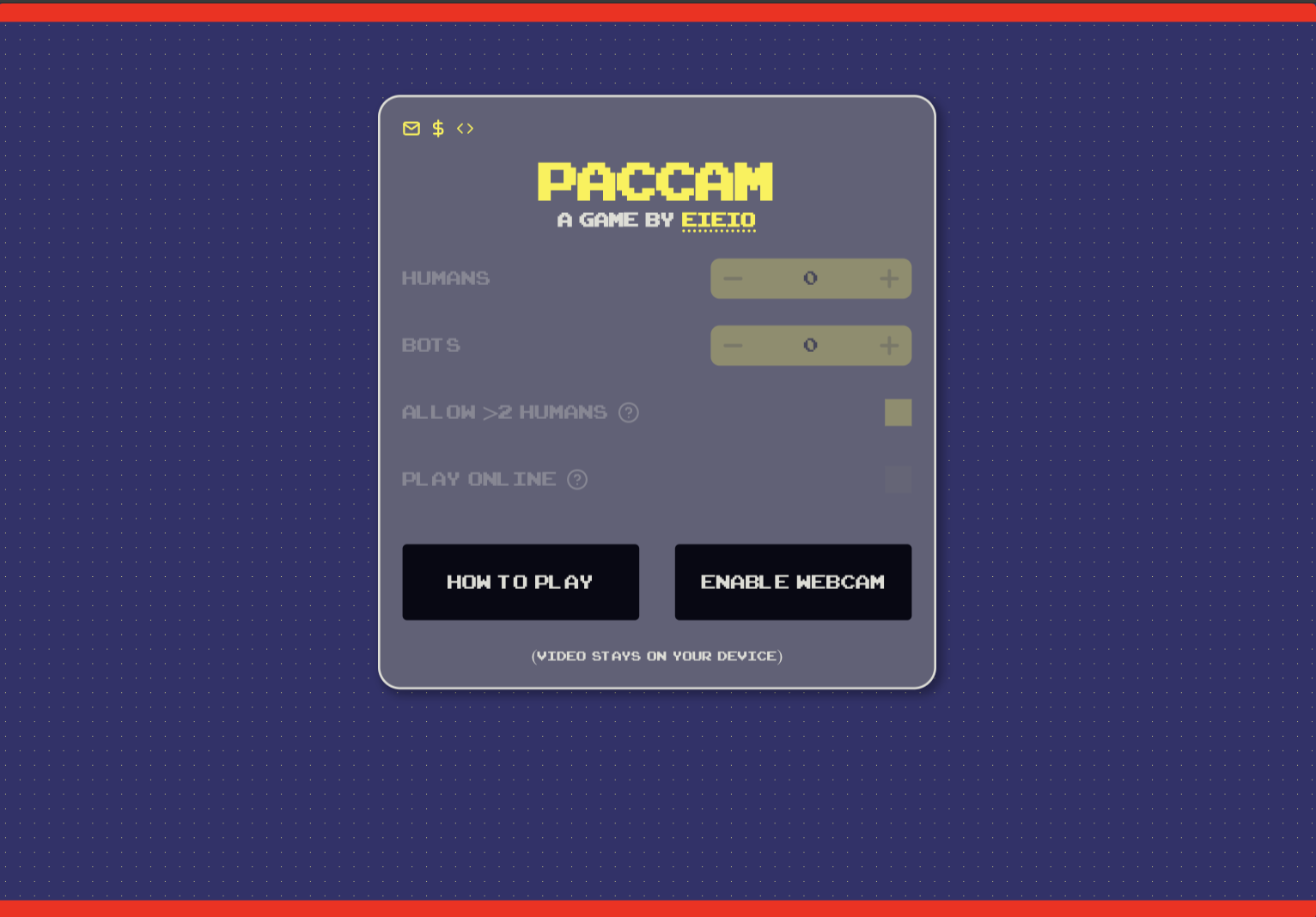

I made a game. It’s called PacCam. It’s Pacman, but you control it with your face.

Looking very cool

You can play it here (or read the code).

You chomp your mouth to move and turn your face to steer. You look…pretty dumb while playing it. At the end it tries to give you the dumbest possible gif of yourself (it tracks when your mouth is the most open, and builds the gif around that moment).

I could fill a book with dumb gifs of me after all the testing I did

I’m really pleased with how the game turned out, but making it was way more work than I expected. Let’s look at why.

Wait, how does this work

Good question.

- I use MediaPipe’s Face Landmarker for face tracking. MediaPipe is a suite of ML tools from Google that come with good prebaked models for tracking humans.

- I use React to display the UI, players, etc.

- The core game logic is a tiny (~3k lines of code) imperative game engine that I wrote and wired up to React.

(A note on MediaPipe: I’ve always found the name weird. To me MediaPipe is “the easy way to track faces and hands in the browser” but it has such a generic name. idk. Still grateful Google released it.)

I use a couple of additional tools like Framer motion, radix, GIF.js, and styled-components. But nothing crazy. The game is largely Just Code.

So the high-level answer to “how does this work” is mostly: MediaPipe is an easy way to do face tracking in the browser and otherwise this is just a website on the internet. We live in the future!

That said, there were some challenges gluing all this tech together. The biggest early challenge was getting React to play nice with MediaPipe.

MediaPipe and React

Here is a simple model of how you use MediaPipe:

- You have a video element on a webpage hooked up to a webcam

- You point MediaPipe at that video element

- MediaPipe gives you information about the faces in the video element on every frame

(I think pointing MediaPipe at a video element is harder than it should be, largely because MediaPipe tries to be generic instead of opinionated. I’m planning to release some helper code to make it easier.)

“Information” means the location of “landmarks” (e.g. the tip of your nose) as well as the value of “blend shapes” (e.g. how open your mouth is). But the point is, MediaPipe sprays lots of data at you (think hundreds of floats tens of times a second).
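To give a feel for that data, here is a minimal sketch of pulling a single blend shape out of one frame's result. It assumes the result has the shape the Face Landmarker returns as far as I know (a `faceBlendshapes` list with one entry per face, each holding `categories` of `{ categoryName, score }`). The helper name `getBlendShapeScore` and the sample values are made up for illustration.

```javascript
// Hypothetical helper: pull one blend shape score out of a Face Landmarker
// style result. Assumes `result.faceBlendshapes[i].categories` is a list of
// { categoryName, score } objects; returns null when nothing matches.
function getBlendShapeScore(result, name, faceIndex = 0) {
  const face = result.faceBlendshapes?.[faceIndex];
  if (!face) return null;
  const match = face.categories.find((c) => c.categoryName === name);
  return match ? match.score : null;
}

// Made-up sample data in that shape
const sampleResult = {
  faceBlendshapes: [
    {
      categories: [
        { categoryName: "jawOpen", score: 0.62 },
        { categoryName: "mouthSmileLeft", score: 0.05 },
      ],
    },
  ],
};

const jawOpenScore = getBlendShapeScore(sampleResult, "jawOpen");
const missingScore = getBlendShapeScore({ faceBlendshapes: [] }, "jawOpen");
```

Returning `null` when no face is found keeps "no face in frame" distinct from "mouth fully closed" (a score of 0).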

Here is a simple model of how you use React:

- You tell React how to compute your desired UI based on the current data that you have

- When that data changes, React automatically updates the UI

So maybe you can see a potential problem here. Naively combining React and MediaPipe and stuffing all your MediaPipe state into React causes React to do lots of updates. This gets slow.

My first React / MediaPipe project solved this with a bunch of convoluted React code that was very careful about when it updated React state (which triggers an update), and relied heavily on what React calls “refs” (values that can be updated imperatively without triggering an update). It was a huge mess, very hard to think about, and still pretty slow.

To solve this issue for PacCam I took a different approach: I put all my MediaPipe logic in a plain JavaScript file and had the relevant React components subscribe to updates - for example, the Pacman component listens for updates around which direction you’re looking and whether your mouth is open.

I originally thought binding React code to non-React code would be a challenge, but it ends up being pretty simple. A toy implementation looks like this:

    class MediaPipeEngine {
      constructor() {
        // Components that want to hear about jaw open changes
        this.jawOpenSubscribers = [];
      }

      doFaceLandmarkThings() {
        // get facelandmarks somehow
        const faceLandmarks = this.getFaceLandmarks();
        // This depends on your current jaw open state, because we
        // use different thresholds for opening and closing your mouth
        const jawOpen = this.calculateJawOpen(faceLandmarks);
        this.notifyJawOpenSubscribers(jawOpen);
      }

      subscribeToJawOpen({ id, callback }) {
        this.jawOpenSubscribers.push({ id, callback });
      }

      notifyJawOpenSubscribers(jawOpen) {
        this.jawOpenSubscribers.forEach(({ callback }) => callback(jawOpen));
      }

      unsubscribeFromJawOpen({ id }) {
        this.jawOpenSubscribers = this.jawOpenSubscribers.filter(
          (subscriber) => subscriber.id !== id
        );
      }
    }

    function Component({ mediaPipeEngine }) {
      const [jawOpen, setJawOpen] = React.useState(0);
      const id = React.useId();

      React.useEffect(() => {
        mediaPipeEngine.subscribeToJawOpen({ id, callback: setJawOpen });
        return () => {
          mediaPipeEngine.unsubscribeFromJawOpen({ id });
        };
      }, [mediaPipeEngine, id]);

      // do something based on jaw being open
    }

I found this to be a much easier approach than forcing everything into React; it’s a very natural way to have inherently imperative logic update your UI.
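The comment in the toy engine mentions using different thresholds for opening and closing your mouth. Here is a minimal sketch of that idea (usually called hysteresis). The threshold values and the function name `nextJawOpenState` are made up for illustration, and the score is assumed to run from 0 to 1.

```javascript
// Hypothetical thresholds; real values would need tuning
const OPEN_THRESHOLD = 0.5; // score must rise above this to count as open
const CLOSE_THRESHOLD = 0.3; // score must fall below this to count as closed

// Returns the new open/closed state given the previous state and the
// latest jaw-open score (assumed to be in the range 0..1).
function nextJawOpenState(wasOpen, score) {
  if (wasOpen) {
    return score > CLOSE_THRESHOLD;
  }
  return score > OPEN_THRESHOLD;
}

// A score hovering around 0.4 does not flip the state in either direction
const scores = [0.1, 0.4, 0.6, 0.4, 0.35, 0.2, 0.4];
let isOpen = false;
const states = scores.map((score) => {
  isOpen = nextJawOpenState(isOpen, score);
  return isOpen;
});
```

With a single threshold, a noisy score sitting right at the boundary would flicker between open and closed on every frame; the gap between the two thresholds absorbs that noise.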

Games and React

Here is a simple model of a game engine backend:

- You tell it where some stuff is and what that stuff does

- In a tight loop, the game engine updates where everything is and what it’s doing

- It gives you information about where all the stuff is on every frame

Here is a simple model of how you use React:

- You tell React how to compute your desired UI based on the current data that you have

- When that data changes, React automatically updates the UI

So maybe you can see a potential problem here. Maybe you can see that it’s very similar to the MediaPipe problem we just talked about.

So you might ask: can we solve the problem in the same way? Well - yes, we can! My game loop notifies a Pacman component of its position by doing something like this:

    class Engine {
      constructor() {
        // Filled in by calculatePlayerPositions() on every frame
        this.positions = [];
        this.playerPositionSubscribers = [];
      }

      startGameLoop() {
        const loop = () => {
          this.calculatePlayerPositions();
          this.notifyPlayerPositionSubscribers();
          requestAnimationFrame(loop);
        };
        requestAnimationFrame(loop);
      }

      subscribeToPlayerPosition({ id, playerNumber, callback }) {
        this.playerPositionSubscribers.push({ id, playerNumber, callback });
      }

      notifyPlayerPositionSubscribers() {
        this.positions.forEach(({ x, y, playerNumber }) => {
          this.playerPositionSubscribers
            .filter((subscriber) => subscriber.playerNumber === playerNumber)
            .forEach(({ callback }) => callback({ x, y }));
        });
      }

      unsubscribeFromPlayerPosition({ id }) {
        this.playerPositionSubscribers = this.playerPositionSubscribers.filter(
          (subscriber) => subscriber.id !== id
        );
      }
    }

    function Pacman({ engine, playerNumber }) {
      const [position, setPosition] = React.useState({ x: null, y: null });
      const id = React.useId();

      React.useEffect(() => {
        engine.subscribeToPlayerPosition({
          id,
          callback: setPosition,
          playerNumber,
        });
        return () => {
          engine.unsubscribeFromPlayerPosition({ id });
        };
      }, [engine, playerNumber, id]);

      // move based on position
    }

In addition to making it easier to control the state updates that React gets, this is a much easier way to run a game loop at all in React. One of the rules of React is that a function is redefined whenever the data that it references changes. This makes lots of sense for UI updates, but it’s a huge pain for a game loop. My game loop needs to reference lots of data that it’s also updating - but I don’t want to redefine the game loop on every frame. With this pattern I don’t have to.
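Both toy engines repeat the same subscribe / notify / unsubscribe trio. One way to factor that out is a small reusable channel. This is a sketch, not code from PacCam: `createChannel` and everything inside it are hypothetical names.

```javascript
// Hypothetical helper, not from PacCam: a tiny reusable subscription channel
function createChannel() {
  const subscribers = new Set();
  return {
    // Registers a callback and returns a function that removes it again
    subscribe(callback) {
      subscribers.add(callback);
      return () => subscribers.delete(callback);
    },
    // Calls every registered callback with the new value
    notify(value) {
      subscribers.forEach((callback) => callback(value));
    },
  };
}

const jawOpenChannel = createChannel();
const received = [];
const unsubscribe = jawOpenChannel.subscribe((value) => received.push(value));
jawOpenChannel.notify(true);
unsubscribe();
jawOpenChannel.notify(false); // nobody is listening any more
```

Because `subscribe` hands back its own cleanup function, a component could write `React.useEffect(() => channel.subscribe(setJawOpen), [channel])` and skip the `id` bookkeeping, at the cost of not being able to look subscribers up by id.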

What else was hard

Well, I spent a lot of time writing my little engine and making the controls feel good and making a tutorial and all of the other things that go into making a game.

But to be honest, “making a website” was a whole lot more challenging for me than the imperative backend-y game engine stuff. I’ve been a backend software engineer for something like 12 years. I learned how to use Flexbox like 8 months ago. The web is hard! But I’m not sure how to turn my webdev challenges into a good blog post - I’m not sure you want to read about me learning that backdrop-filter needs a vendor prefix to work on Safari.

But let’s talk about some things that I found interesting.

What did I find interesting

A lot! Too much! Too much to put in a blog post. But here are a few highlights that we can talk about:

- Adding bots (which I did way too late)

- Making the game work on any display size

- Tweaking the game mechanics

- Figuring out how to teach people the controls

Adding bots

I added bots to PacCam about a week before finishing it. I originally wasn’t planning to have bots at all.

Waiting to add bots was a mistake - and releasing without them would have been an awful idea.

I added bots to make it easier for some friends to test the game solo, and that gets at why having bots is so important: people often don’t have someone to play with! I was thinking of PacCam as a local multiplayer game (and that’s how I did all my early testing) but…local multiplayer games are not in a great place these days! There’s a reason games mostly do online play!

But in addition to improving the final product, bots made testing the game - looking for bugs and thinking about balance - easier. Before adding bots I did all my testing using a gamemode that let me control multiple players with a single face (ok for finding bugs, awful for balance testing).

4 bots going at it. My money's on pink.

So anyway. Bots are great. How do they work?

My bot logic relies on composing a couple of handy primitives:

- A `plan` - like hunting other players or eating dots (and corresponding logic for executing the plan)

- A function `f` that generates a random bounded timestamp in the near future

- A function `g` for making a weighted random choice from a list of choices

Every game tick, bots check whether they’re allowed to update their plan (bounded by f) and potentially do so; for example, if a bot is now capable of eating other players, they will probably transition to the hunting plan.

When executing a plan, bots potentially update the target of their plan (e.g. the player to hunt, or flee from, the dot they want to eat) bounded by f. When they choose a target they do so using g. For example, when a bot is hunting other players, it computes its distance to all other players and turns that into a score that is 1/(distance**3) (higher is better). And then it makes a weighted random choice from that list; if there are two players who are 1 and 2 units away from the bot, it will hunt the closer player 8 times out of 9.
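The 8-out-of-9 figure can be checked with a few lines. This is a worked example of the arithmetic only; `huntScore` and `choiceProbabilities` are names made up for the sketch.

```javascript
// Score from the text: closer players get a much higher score
function huntScore(distance) {
  return 1 / distance ** 3;
}

// Turn a list of scores into the probability of picking each entry
function choiceProbabilities(scores) {
  const total = scores.reduce((sum, score) => sum + score, 0);
  return scores.map((score) => score / total);
}

const distances = [1, 2];
const probabilities = choiceProbabilities(distances.map(huntScore));
// probabilities[0] is 8/9 (about 0.889) and probabilities[1] is 1/9
```

The scores are 1 and 1/8, so the total is 9/8 and the closer player gets (1) / (9/8) = 8/9 of the weight. Cubing the distance is what makes the bot strongly prefer nearby players while still occasionally chasing a distant one.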

In practice that looks something like this:

    class BotStateMachine {
      maybeUpdatePlan({ now, superState }) {
        if (superState === "am-super" && this.plan !== PLAN.HUNTING) {
          // start hunting players after eating a power pellet
          // - but not immediately
          const shouldMoveToHunt = this.pastRandomNearFutureTimestamp({
            currentTime: now,
            stateKey: "movedToHunting",
            targetFrequency: 350,
          });
          if (shouldMoveToHunt) {
            this.plan = PLAN.HUNTING;
          }
        }
        // other update logic
      }

      maybeExecutePlan({ now, position, playerPositions }) {
        if (this.plan === PLAN.HUNTING) {
          const canPickNewTarget = this.pastRandomNearFutureTimestamp({
            currentTime: now,
            stateKey: "lastHuntTargetChange",
            targetFrequency: 2250,
          });
          if (canPickNewTarget) {
            // If another player is close, we should be allowed to
            // chase them instead. But only sometimes
            const distanceToOtherPlayers = this.distanceToOtherPlayers(
              position,
              playerPositions
            );
            this.target = this.weightedRandomChoiceFromList({
              list: distanceToOtherPlayers,
              logKey: "choose hunting target",
            });
          }
          const canReorient = this.pastRandomNearFutureTimestamp({
            currentTime: now,
            stateKey: "lastHuntReorient",
            targetFrequency: 500,
          });
          // Assumption: the chosen target is the player being hunted
          const player = this.target;
          // By default, this moves in the current direction
          // until we overlap with the target in the relevant
          // axis (e.g. move up till we overlap horizontally).
          // `canReorient` lets us pick a new direction even
          // when that's not true, to keep things interesting
          this.orientTowardsTarget({
            position,
            target: player.position,
            distanceScaleFactor: 2.5,
            targetState: this.huntingState,
            pickNewEvenIfAlreadyChoseDirection: canReorient,
            targetDirection: player.direction,
          });
        }
        // other execution logic
      }
    }

And here are the helper functions (both are methods on the bot state machine):

    // Weighted choice from `list` respecting score
    weightedRandomChoiceFromList({ list, logKey, scoreScaleFactor = 1 }) {
      if (list.length === 0) {
        console.warn(
          `Asked to choose a direction but no valid choices (key: ${logKey})`
        );
        return null;
      }
      const scaled = list.map((item) => ({
        ...item,
        score: item.score ** scoreScaleFactor,
      }));
      const total = scaled.reduce((acc, item) => acc + item.score, 0);
      const rand = Math.random() * total;
      let runningTotal = 0;
      for (let i = 0; i < list.length; i++) {
        runningTotal += scaled[i].score;
        if (rand < runningTotal) {
          return list[i];
        }
      }
      // Should be unreachable when every score is a positive number
      console.log(
        `NO RETURN?? ${logKey} ${rand} ${runningTotal} ${total} ${JSON.stringify(list)} | ${JSON.stringify(scaled)}`
      );
    }

    // Pick a time in the near future at which something can occur,
    // and report whether we are past it yet
    pastRandomNearFutureTimestamp({
      currentTime,
      stateKey,
      targetFrequency,
      jitterFactor = 0.25,
      runOnSuccess = () => {},
    }) {
      if (!this.smoothRandomState[stateKey]) {
        this.smoothRandomState[stateKey] = {
          lastTimeSomethingHappened: currentTime,
          targetDelta: null,
        };
      }
      const lastTimeSomethingHappened =
        this.smoothRandomState[stateKey].lastTimeSomethingHappened;
      if (this.smoothRandomState[stateKey].targetDelta === null) {
        // Jitter the target delay so bots don't act on a fixed rhythm
        let threshold = targetFrequency;
        const smoothVariation = Math.sin(currentTime / 1000) * jitterFactor;
        const randomVariation = (Math.random() * 2 - 1) * jitterFactor;
        threshold *= 1 + (smoothVariation + randomVariation) / 2;
        this.smoothRandomState[stateKey].targetDelta = threshold;
      }
      const delta = currentTime - lastTimeSomethingHappened;
      if (delta > this.smoothRandomState[stateKey].targetDelta) {
        this.smoothRandomState[stateKey].lastTimeSomethingHappened = currentTime;
        this.smoothRandomState[stateKey].targetDelta = null;
        runOnSuccess();
        return true;
      }
      return false;
    }

I really enjoyed thinking in these terms. Separating “what are we doing” from “how do we do that” kept the logic simple, as did having a single answer to “how does the bot make a decision and when does it change its mind.”

I think adding this randomness also makes the bots feel more human - they make reasonable decisions most of the time, but sometimes they chase a player that’s far away or try to run across the screen to grab a dot that they saw.

This logic is also fully separate from the game engine; all the bot state machine can do is tell the game which direction a bot is facing and whether its mouth is currently open. I never tried integrating the bot logic directly into the game engine, but I have to imagine it would have been a headache.

Working on any display size

Getting sites to work across display sizes stresses me out. This makes sense - it’s a hard problem and I’m pretty new to webdev.

The problem was particularly challenging for me with PacCam because it’s more of an application than a site. I wanted the game to run full screen, never require scrolling, and be played on top of a webcam that filled the entire screen.

“Make a video element fill the screen” turned out to not be that bad here, relative to some of my other MediaPipe work - the video element lives in a wrapper div with width and height both set to 100%. The video element also has width and height set to 100%, and object-fit set to cover. This expands the video element to fit its container without distorting it.

(One caveat: this approach doesn’t work as-is if you want objects to directly track someone’s hands or face - e.g. if you want a picture to follow your finger around - because it potentially results in some of the video not being visible, which means that the coordinates that MediaPipe gives you need to be adjusted.)

(Setting the wrapper’s height to 100% also requires that you set `height: 100%` on your `body` and `html` elements.)
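For the case where you do want something to follow a landmark, here is a sketch of the coordinate adjustment mentioned above. It assumes landmarks are normalized to 0..1 relative to the full video frame, that the video uses `object-fit: cover` with the default centered position, and that the video is not mirrored. `landmarkToContainerPx` is a hypothetical helper, and the `video` size would be the camera's intrinsic resolution.

```javascript
// Maps a normalized landmark (x and y in 0..1, relative to the full video
// frame) to pixel coordinates inside a container that shows the video with
// `object-fit: cover` and the default centered `object-position`.
function landmarkToContainerPx({ x, y }, video, container) {
  // `cover` scales the video uniformly until it fills both dimensions
  const scale = Math.max(
    container.width / video.width,
    container.height / video.height
  );
  const displayedWidth = video.width * scale;
  const displayedHeight = video.height * scale;
  // Whatever overflows the container is cropped equally from both sides
  const offsetX = (container.width - displayedWidth) / 2;
  const offsetY = (container.height - displayedHeight) / 2;
  return {
    x: x * displayedWidth + offsetX,
    y: y * displayedHeight + offsetY,
  };
}

// A 1280x720 webcam shown in a 1000x1000 container
const videoSize = { width: 1280, height: 720 };
const containerSize = { width: 1000, height: 1000 };
const centerPx = landmarkToContainerPx({ x: 0.5, y: 0.5 }, videoSize, containerSize);
const leftEdgePx = landmarkToContainerPx({ x: 0, y: 0.5 }, videoSize, containerSize);
```

The center of the video lands at the center of the container, while the left edge of the video maps to a negative x: that part of the frame is cropped off screen, which is exactly why the raw coordinates can't be used directly.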

This approach cuts off some of the webcam if its dimensions don’t match the dimensions of the screen, but for PacCam I think that’s ok: your window is probably close-ish to your webcam dimension, and the game can still track you if you’re in the webcam frame but not visible on screen.

the game doesn't handle adjusting the window well, but the webcam looks ok!

The bigger challenge here was determining how big the game elements should be (and how many pellets should be in a row and column). The approach I took there was a little messy. When the game loads, I:

- Check the width and height of the window

- Take the larger of the two dimensions as the “primary” dimension

- Have a hardcoded number (21) of “slots” in the primary dimension (this is arbitrary; I just thought it felt right)

- Divide the primary dimension size by that number to determine our “slot” size

- Determine the number of slots in the smaller dimension by dividing it by the slot size (and flooring it)

- Compute the size of pellets, pacman, etc in terms of slot sizes

- Add spacing elements in the secondary dimension to account for any partial slots

This makes the game unplayable on windows that are very small in one dimension, but the game would already be unplayable in those conditions so that seems fine.

To make that concrete, let’s say that our window is 2100x1450.

- Width is > height, so width is our primary dimension

- 2100 / 21 = 100, so slots are 100x100 and we have 21 columns

- `Math.floor(1450 / 100)` is 14, so we have 14 rows

- Pacman is 2 slots large, so it is 200x200

- We add 25px tall spacing elements to the top and bottom of the grid

I've made the spacing elements red here; they ensure our height has a round number of 'slots'
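The sizing steps above can be sketched as a single function. The slot count (21) and the Pacman size (2 slots) come from the text; the function name `computeGrid` and the shape of its return value are assumptions made for the example.

```javascript
const PRIMARY_SLOTS = 21; // hardcoded slot count from the text
const PACMAN_SLOTS = 2; // Pacman is 2 slots large

// Hypothetical function; the name and return shape are assumptions
function computeGrid(windowWidth, windowHeight) {
  const primary = Math.max(windowWidth, windowHeight);
  const secondary = Math.min(windowWidth, windowHeight);
  const slotSize = primary / PRIMARY_SLOTS;
  const secondarySlots = Math.floor(secondary / slotSize);
  // Leftover pixels are split between two spacing elements
  const spacing = (secondary - secondarySlots * slotSize) / 2;
  const widthIsPrimary = windowWidth >= windowHeight;
  return {
    slotSize,
    columns: widthIsPrimary ? PRIMARY_SLOTS : secondarySlots,
    rows: widthIsPrimary ? secondarySlots : PRIMARY_SLOTS,
    pacmanSize: PACMAN_SLOTS * slotSize,
    spacing,
  };
}

const grid = computeGrid(2100, 1450);
// grid is { slotSize: 100, columns: 21, rows: 14, pacmanSize: 200, spacing: 25 }
```

In a browser this would presumably be called once at load with `window.innerWidth` and `window.innerHeight`.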

Since these calculations are done at page load time, the game totally breaks if you resize the window while playing. Don’t do that! I wasn’t sure how to address this (since recalculating on window resize would change the number of pellets we should display).

This approach still feels a little awkward and messy to me, but it seems to work fine in practice and I really like having the game fill the screen regardless of dimensions.

Tweaking game mechanics

A big part of designing some games is picking good numbers. And a thing I was surprised to learn last year is that I hate picking good numbers.

This is surprising to me because I like numbers! And I like playing games with good numbers! But I don’t really like doing this, and I struggle to find the energy to pick my numbers in principled ways.

I mostly dodge this problem by building games that don’t need good numbers: I’m much happier thinking about how to nudge humans to not be jerks. But PacCam needed some numbers.

And I…did not pick my numbers in a principled way! Shocker! I found my numbers by picking something, playing a lot solo, adjusting, playing with friends, adjusting, and then playing some more. Numbers I picked this way include:

- Player speeds (including the fact that you move faster after eating a power pellet)

- Scores for pellets, fruit, and eating other players

- The number of power pellets to have on screen at once

- The speed at which power pellets respawn

- Thresholds for whether a player overlaps with something they’re trying to eat

- Probably other things

Sorry if you don’t like the numbers I picked. Feel free to send me a pull request or fork the code.

Teaching the controls

When I explain PacCam I ask people some simple questions - are they familiar with the videogame Pacman? Are they familiar with the human face?

They consistently say yes; most people are familiar with Pacman and know what the human face is. I explain that my game straightforwardly combines those things. They nod.

But it turns out that putting your knowledge of the human face into controlling Pacman is hard! That’s for a few reasons.

- It is very hard to look at the screen while trying to turn your face

- It is tempting to turn your face so far that MediaPipe loses track of your face

- The game wants you to open your mouth pretty wide, but people tend to do small and fast chomps

I quickly picked up on this during playtesting and learned how to give people the right intuitions for the controls. I’d stress that you only need to make small face movements and that you should try opening your mouth more but slower - and that worked pretty well.

But transmitting that information to strangers on the internet is harder. I tried to address this in a few ways:

- Tweaking my face detection thresholds constantly - did you know that it’s harder to open your mouth when you’re looking down? The game accounts for that.

- Adding instructions that give a written version of my advice

- Adding a tutorial that nags you if you turn your head too far (I’m not sure how useful it is)

- Recording a video of my disembodied head controlling the game

well, the game's not meant to make you look *flattering*

Recording the video was pretty fun. I originally recorded it with a white background, but that put an ugly white blob on the how to play screen. The white wasn’t quite consistent enough to trivially edit out, so I reshot the tutorial with a super jank greenscreen:

This made it pretty easy to edit out the background to make a “transparent” video - until I realized that the MP4 I exported it as didn’t support transparency. So the video is actually played in a hidden video element and copied to a canvas that alphas-out the relevant pixels.
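Here is a rough sketch of the "alpha-out the relevant pixels" step, working on raw RGBA bytes. It is not PacCam's actual code: `keyOutGreen` is a hypothetical name, and the "green must clearly dominate red and blue" rule with its 1.4 factor is a made-up threshold that would need tuning against real footage.

```javascript
// Makes "green enough" pixels fully transparent, in place.
// `pixels` is RGBA data like the `data` field of a canvas ImageData.
// The dominance factor is a made-up threshold that would need tuning.
function keyOutGreen(pixels, dominance = 1.4) {
  for (let i = 0; i < pixels.length; i += 4) {
    const red = pixels[i];
    const green = pixels[i + 1];
    const blue = pixels[i + 2];
    if (green > red * dominance && green > blue * dominance) {
      pixels[i + 3] = 0; // alpha channel
    }
  }
  return pixels;
}

// Two pixels: greenscreen green, then a skin-ish tone
const frame = new Uint8ClampedArray([
  30, 220, 40, 255,
  210, 160, 140, 255,
]);
keyOutGreen(frame);
```

In the browser the bytes would come from drawing the hidden video onto a canvas with `drawImage`, reading them with `getImageData`, and writing the result back with `putImageData` on every frame.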

After doing all of this I realized I could have just changed the game to have a green background instead of showing the webcam in the background, removing the need for a physical greenscreen. But this was more fun anyway.

Wrapping up

So that’s it! That’s PacCam. It’s the most “normal” game I’ve made in like a year (that is, it’s a playable game that isn’t also a large scale experiment about human behavior). I hope you enjoy it!

To be honest, I’m a little nervous that I spent too long on the game relative to the number of people who will play it. I’m not sure that games like this are my strong suit. But I learned a whole lot about web development while making it and had fun along the way, so it’s hard to be too sad :)

If you’re interested in MediaPipe, I’ve got a bunch of undocumented examples of how to use it on Glitch - I’m planning to turn those examples into a proper library / blog post.

And I’ll be back with more stuff soon!